Mitigating AI risks: Essential strategies for PMs

Jun 27, 2024


In March 2023, OpenAI experienced a data breach in which the personal data of some users, including payment information and chat histories, was inadvertently exposed. The incident was caused by a bug in an open-source library used by OpenAI (the Redis client library redis-py), which allowed some users to see titles from other users' chat histories.

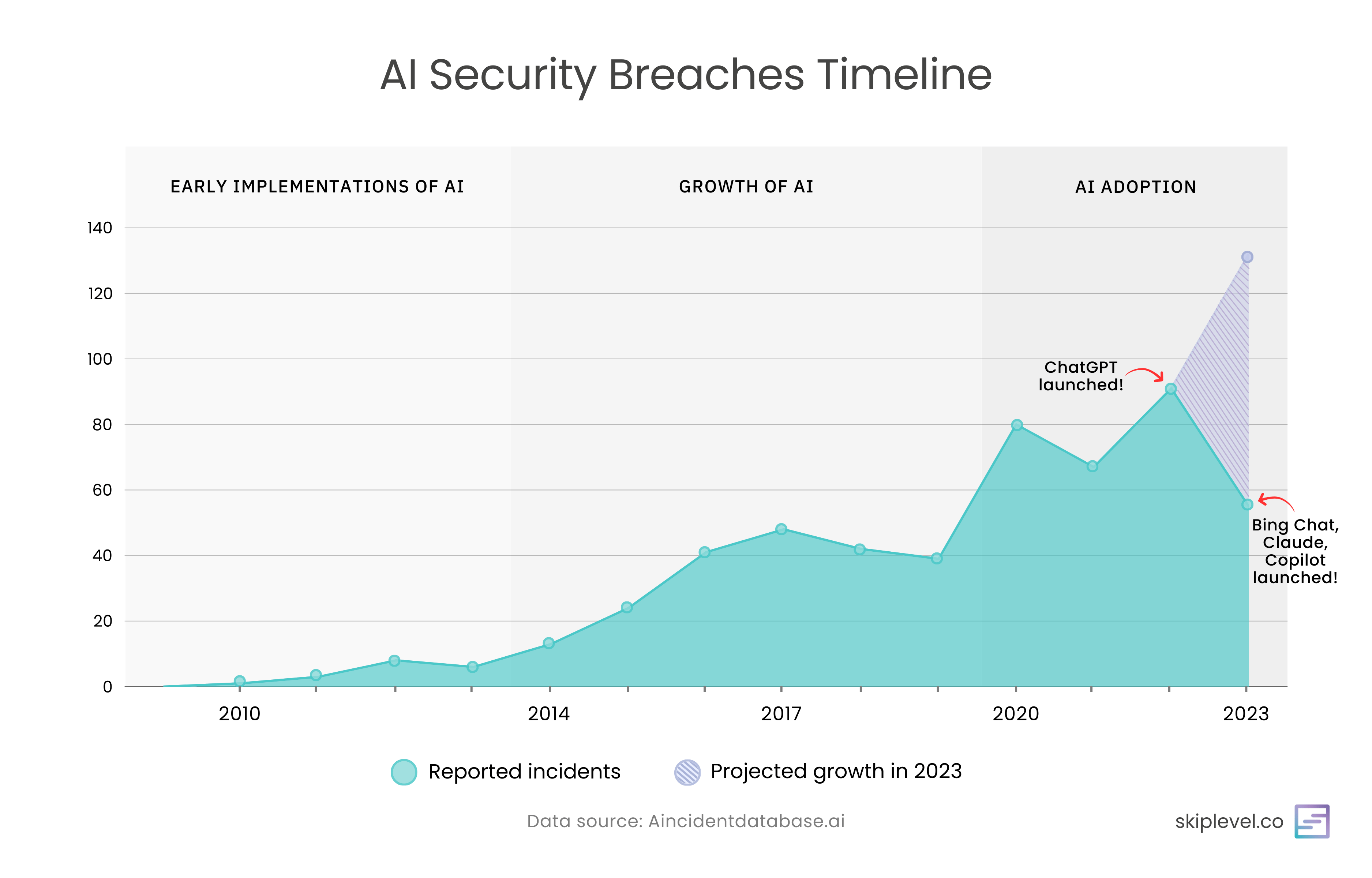

As AI adoption grows, security breaches have increased along with it. In fact, according to incidentdatabase.ai, 2023 was projected to see the highest number of AI incidents to date.

AI's evolution has been nothing short of incredible, but it comes with a significant concern: the safety of our data.

This raises the question: as PMs increasingly rely on AI to do their jobs and more and more apps include AI features, how do we mitigate the security risks inherent in using and developing AI products?

That’s what we’ll explore in this essay. I’m going to break down the types of security risks posed by AI, then share action items you can implement immediately, along with strategies for protecting data against AI security risks when working with devs to develop AI products.

TL;DR

| Security Risk | How to mitigate |
|---|---|
| Insider Threats | Role-based access control policies |
| Insecure API Integrations | Monitor activity for anomalies |
| Model Inversion Attacks | Avoid sharing sensitive information |
| Data Poisoning | Verify your data source |
| Data Leakage | Monitor activity for anomalies |

Security risks when using AI

These include risks from inside the organization as well as from external actors who exploit weak security measures or deceive employees to gain access to data they aren’t authorized to have.

- Insider Threats: Employees or other insiders misuse their access to steal or expose sensitive data. For example, you discover that an employee had unauthorized access to sensitive data and AI models and used that access to look up personal information about a specific customer.

- Insecure API Integrations: Weak or poorly secured connections between systems let attackers access data. For example, during a routine security audit, you find that some API endpoints in your AI product lack proper authentication, making them vulnerable to attack.

The risks below concern the accuracy, privacy, and confidentiality of the data used and produced by AI systems.

- Model Inversion Attacks: Attackers reverse-engineer an AI model to uncover the sensitive data it was trained on. For example, as part of developing a new personalized recommendation feature, you and your team train the AI model on real user data. Malicious actors could then reverse-engineer the model to extract that sensitive training data.

- Data Poisoning: Malicious data is inserted into the training set, causing the AI to make mistakes. For example, imagine your website has input fields where users enter data that is used to train your AI model. Malicious actors can enter false data that ends up in your training dataset and skews the model's outputs.

- Data Leakage: A security flaw or mistake unintentionally exposes private information. For example, your website uses an AI chatbot to handle customer queries, and some customers unintentionally enter sensitive information into it. A bug in your code then exposes user chat histories, along with that sensitive information.

Immediate action items to protect your content

1. Avoid sharing sensitive information

The simplest way to protect your data is not to share it with AI in the first place: keep personal and sensitive information out of your AI prompts.

This can be as simple as a team policy against using your company or product names in prompts, to keep competitors from accessing business-sensitive information about your company, or against entering Personally Identifiable Information (PII) such as passport or credit card numbers.

Instead, use general terms and descriptors. For example, instead of sharing detailed financial figures when seeking advice from an AI chatbot, use general terms like "revenue increased by a significant percentage." This minimizes the risk of exposing critical personal and business data.
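If your team wants a safety net for this policy, a small pre-send check can catch obvious slips. The sketch below is a minimal example, assuming prompts pass through a script of your own before they reach the AI tool: it masks email addresses and card-like numbers that pass the Luhn checksum. The regex patterns and the `redact_prompt` name are illustrative assumptions, not a complete PII detector.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage
# (names, addresses, passport numbers, and so on).
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(number: str) -> bool:
    """Return True if the digits in `number` pass the Luhn checksum used by payment cards."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    # Walk from the rightmost digit, doubling every second one.
    for i, d in enumerate(reversed(digits)):
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_prompt(prompt: str) -> str:
    """Mask likely email addresses and card numbers before a prompt is sent to an AI tool."""
    prompt = EMAIL_RE.sub("[EMAIL]", prompt)

    def mask_card(match: re.Match) -> str:
        # Only mask digit runs that pass Luhn, to reduce false positives on long IDs.
        text = match.group()
        return "[CARD]" if luhn_valid(text) else text

    return CARD_RE.sub(mask_card, prompt)


print(redact_prompt("Refund jane.doe@example.com on card 4111 1111 1111 1111, order 12345."))
# Refund [EMAIL] on card [CARD], order 12345.
```

Requiring the Luhn check leaves short order numbers and most random digit strings alone, at the cost of missing mistyped card numbers.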

2. Anonymize sensitive data

It’s not always possible to avoid sharing personal information, since AI responses get more accurate the more context and examples you provide. In that case, anonymize it!

For instance, if you are analyzing customer feedback with an AI tool, replace real names and contact details with pseudonyms or unique identifiers. You can also slightly modify the data so that it still provides the necessary level of accuracy but is effectively useless to unintended users or malicious actors who gain access to it.

For example, a healthcare provider might anonymize patient records before using AI to identify treatment trends. This protects individual identities while still giving the AI the data it needs.
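Here’s a minimal sketch of both ideas, assuming customer feedback arrives as simple records with hypothetical `name`, `email`, `age`, and `feedback` fields. Names are dropped, emails become stable pseudonyms via a keyed hash (HMAC-SHA256), and ages get a small random offset. The key, field names, and noise level are placeholders to adapt.

```python
import hashlib
import hmac
import random

# Hypothetical secret key; in practice, keep it in a secrets manager and never
# send it to the AI tool along with the data.
PSEUDONYM_KEY = b"replace-with-a-long-random-secret"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Map a real identifier to a stable pseudonym with a keyed hash (HMAC-SHA256)."""
    # Normalize so casing or stray whitespace maps to the same pseudonym.
    normalized = value.strip().lower().encode("utf-8")
    digest = hmac.new(key, normalized, hashlib.sha256).hexdigest()
    return "customer_" + digest[:12]


def anonymize_feedback(records, age_noise=2, seed=None):
    """Drop names, pseudonymize emails, and jitter ages before analysis with an AI tool."""
    rng = random.Random(seed)
    cleaned = []
    for rec in records:
        cleaned.append({
            # Same email -> same pseudonym, so feedback can still be grouped per customer.
            "customer": pseudonymize(rec["email"]),
            # Small random offset: age trends survive, exact ages don't.
            "age": rec["age"] + rng.randint(-age_noise, age_noise),
            "feedback": rec["feedback"],
        })
    return cleaned
```

A keyed hash is used instead of a plain hash because anyone can hash a list of known email addresses and match them against plain SHA-256 output; without the key, the pseudonyms can’t be recomputed. Free-text fields like `feedback` can still contain names or contact details, so they may need their own scrubbing.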

3. Update your privacy settings

Many AI companies use your prompts and inputs to train their models by default, which means data you share could be exposed to unauthorized users of the same AI model.

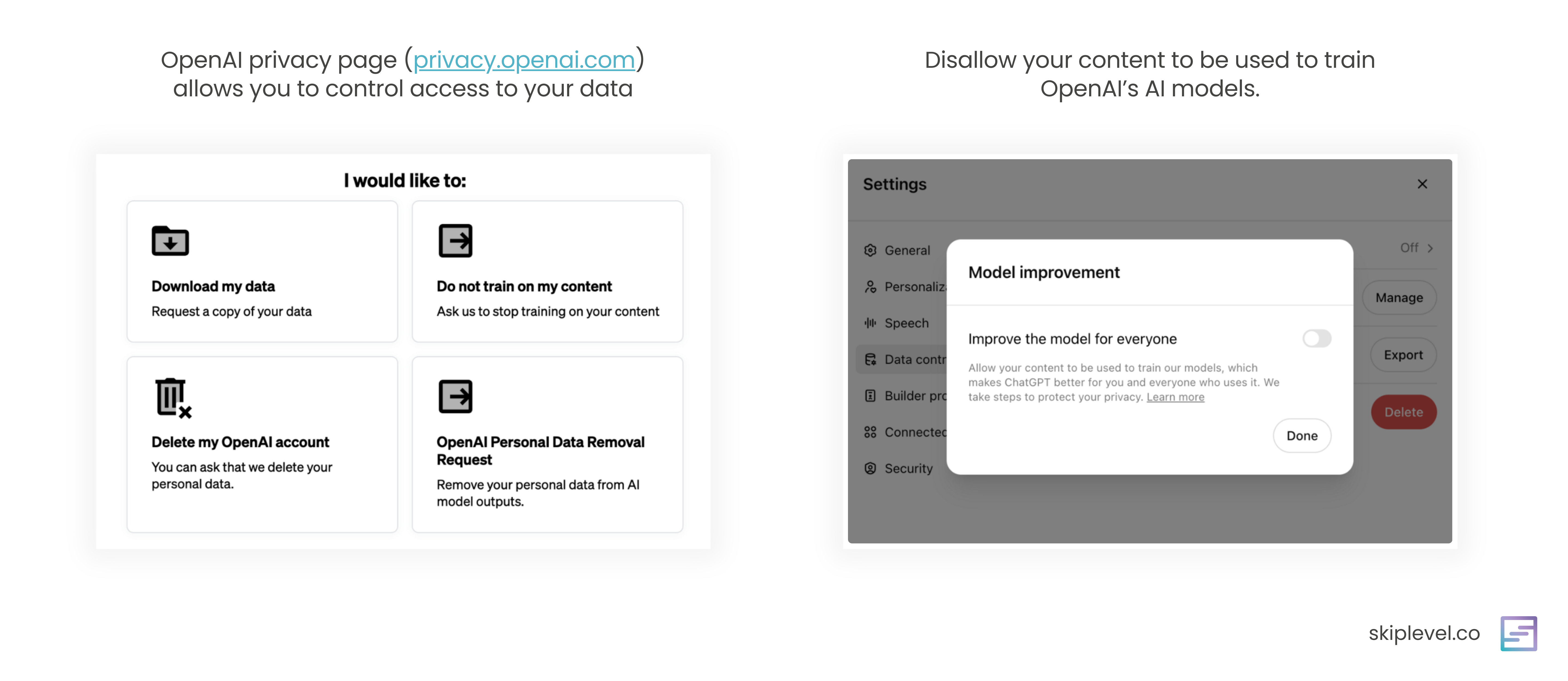

Privacy laws exist to protect users' data, and some include AI-specific provisions. Laws and proposals such as the GDPR (EU), the AI Act (EU), and the proposed ADPPA (American Data Privacy and Protection Act, US) often require AI companies to let users opt out of having their data used to train models, request access to the data stored about them, and delete their data.

This means that whichever AI bot you prefer, you likely have some control over how your data is used, so make sure you set these controls before you start using it!

ChatGPT has a privacy page where you can ask for your personal data to be deleted and for your content not to be used to train its models. If you have an OpenAI account, you’ll also want to turn off “Improve the model for everyone” under your data control settings.

If you’re using or integrating with a third-party AI tool, check whether your data will be used to train its model. If so, ask to opt out.

4. Use role-based access control policies for your team

To protect against insider threats, set up strong access controls within your organization using role-based access control (RBAC).

RBAC grants permissions to roles rather than to individuals, and people get access only through the roles assigned to them. This ensures that only authorized personnel can access sensitive data and keeps anyone outside your immediate team from reaching it. For example, sensitive project details should only be accessible to team members directly involved in the project.
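At its core, RBAC is a deny-by-default lookup: users map to roles, and roles map to permissions. The sketch below uses made-up role and permission names (`atlas_team`, `read:atlas_customer_data`) to show how project details stay visible only to people on the project. Real deployments would typically rely on your identity or cloud provider’s access management rather than hand-rolled dictionaries.

```python
# Hypothetical roles and permissions for illustration only.
ROLE_PERMISSIONS = {
    "atlas_team": {"read:atlas_roadmap", "read:atlas_customer_data"},
    "atlas_stakeholder": {"read:atlas_roadmap"},
    "support_agent": {"read:support_tickets"},
}

# Users get permissions only through the roles assigned to them.
USER_ROLES = {
    "alice": {"atlas_team"},
    "bob": {"support_agent"},
    "carol": {"atlas_stakeholder"},
}


def can_access(user: str, permission: str) -> bool:
    """Deny by default: allow only if one of the user's roles grants the permission."""
    roles = USER_ROLES.get(user, set())
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


def revoke_role(user: str, role: str) -> None:
    """Removing one role removes every permission that came with it."""
    USER_ROLES.get(user, set()).discard(role)
```

Because access flows through roles, taking someone off a project means removing one role rather than hunting down individual grants.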

Strategies for managing risk when developing AI

Managing risk and building AI responsibly are core competencies of AI product management, so all of the strategies below are worth knowing whether you’re an AI PM today or hope to transition into the role someday.

1. Verify your data source

An effective way to mitigate the risks of insecure API integrations and data poisoning is to verify the data source first. As a PM, this means working closely with your dev and data teams to define protocols that only accept data from trusted, verified sources, for example by identifying (authenticating) who submits data and/or whitelisting approved sources.

At its simplest, this means gating all user text inputs used to train your AI model behind account authentication.

For extra security, you can whitelist accounts so you have a high degree of confidence that any data that enters your AI model is high-quality and trustworthy.

Of course, this adds overhead: your team has to whitelist individual accounts, and users may need to apply to be whitelisted (depending on your requirements). Weigh time, effort, and customer experience when deciding on protocols.
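Both levels of gating can be sketched as a filter that runs before any user input is added to the training set. This is a minimal example under assumed names: `Submission` and `gate_training_data` are hypothetical, and `account_id` is assumed to be `None` when the user isn’t signed in.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Set, Tuple


@dataclass
class Submission:
    account_id: Optional[str]  # None means the user was not signed in
    text: str


def gate_training_data(
    submissions: Iterable[Submission],
    allowlist: Optional[Set[str]] = None,
) -> Tuple[List[Submission], List[Submission]]:
    """Split submissions into (accepted, rejected) before they reach the training set.

    Level 1: require an authenticated account.
    Level 2 (optional): also require the account to be on an allowlist.
    """
    accepted, rejected = [], []
    for sub in submissions:
        if sub.account_id is None:
            rejected.append(sub)  # anonymous input never reaches training data
        elif allowlist is not None and sub.account_id not in allowlist:
            rejected.append(sub)  # authenticated, but not approved for training
        else:
            accepted.append(sub)
    return accepted, rejected
```

Keeping rejected submissions, rather than silently dropping them, gives the team something to review when deciding who should be added to the allowlist.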

2. Monitor activity for anomalies

Even with data source verification, it’s important to monitor for unusual activity by looking for odd patterns or outliers in your datasets. This is especially effective for catching data poisoning attempts.

Luckily, you can automate this with statistical and machine learning-based anomaly detection techniques that flag out-of-the-norm activity.

Statistical anomaly detection uses measures like the mean and standard deviation to identify unusual data points. Machine learning-based techniques use unsupervised models (e.g., autoencoders or one-class SVMs) to learn the normal patterns in the data and flag anything that doesn’t fit them.
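As a minimal sketch of the statistical approach, the function below computes a z-score (how many standard deviations a value sits from the mean) for each incoming value and flags those above a threshold of 3. It assumes a hypothetical metric, such as submissions per hour from one account, and scores new values against a trusted baseline rather than against the batch being checked, since a large poisoned value inflates its own batch’s standard deviation and can mask itself.

```python
import statistics
from typing import List, Sequence


def flag_anomalies(
    baseline: Sequence[float],
    incoming: Sequence[float],
    threshold: float = 3.0,
) -> List[float]:
    """Return incoming values whose z-score against a trusted baseline exceeds the threshold."""
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)  # sample standard deviation; needs >= 2 baseline points
    if stdev == 0:
        # Perfectly flat baseline: anything different from the mean is unusual.
        return [x for x in incoming if x != mean]
    return [x for x in incoming if abs(x - mean) / stdev > threshold]


# Hypothetical baseline: hourly submissions from one account during a normal week.
baseline = [20, 22, 19, 21, 23, 20, 18, 22, 21, 19]
print(flag_anomalies(baseline, [21, 19, 24, 95]))  # [95]
```

For the machine learning-based approach, scikit-learn provides `OneClassSVM` (in `sklearn.svm`) and `IsolationForest` (in `sklearn.ensemble`) as common starting points.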

As a product manager developing AI products, you should advocate for integrating these techniques into your app’s data pipelines.

3. Incorporate adversarial training

Security is an important aspect of AI product management, so if you’re an AI PM developing AI products and features, always keep the worst-case scenario in mind.

One effective strategy is to work with your dev team on adversarial training to proactively defend against malicious attacks. This involves deliberately integrating adversarial examples into the model training process.

Adversarial examples are inputs intentionally designed to deceive the model into making incorrect predictions. Training on them forces the model to learn how to handle those kinds of deceptive inputs, which makes it more resilient.
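The toy sketch below illustrates the idea using the fast gradient sign method (FGSM), a standard way to generate adversarial examples, on a two-feature logistic-regression classifier in plain Python. The data and hyperparameters (`eps`, `lr`, `epochs`) are made up for illustration; a real team would apply the same loop to its actual model, typically in a framework such as PyTorch.

```python
import math
import random
from typing import List, Sequence, Tuple

Example = Tuple[List[float], int]  # (features, label 0 or 1)


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def predict(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    """Probability that x belongs to class 1 under a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def fgsm(w, b, x, y, eps):
    """FGSM: shift each feature by eps in the direction that increases the loss."""
    err = predict(w, b, x) - y  # for cross-entropy loss, d(loss)/dx_i = err * w_i
    return [xi + eps * ((err * wi > 0) - (err * wi < 0)) for xi, wi in zip(x, w)]


def sgd_step(w, b, x, y, lr):
    """One gradient-descent step on the cross-entropy loss for a single example."""
    err = predict(w, b, x) - y
    return [wi - lr * err * xi for wi, xi in zip(w, x)], b - lr * err


def adversarial_train(data: List[Example], epochs=50, lr=0.1, eps=0.3, seed=0):
    """Train on each clean example plus an FGSM copy crafted against the current model."""
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling doesn't reorder the caller's list
    w, b = [0.0] * len(data[0][0]), 0.0
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            x_adv = fgsm(w, b, x, y, eps)
            w, b = sgd_step(w, b, x, y, lr)
            w, b = sgd_step(w, b, x_adv, y, lr)
    return w, b


def accuracy(w, b, data: List[Example], eps=0.0) -> float:
    """Accuracy on clean inputs (eps=0) or on FGSM-attacked inputs (eps > 0)."""
    hits = 0
    for x, y in data:
        x_eval = fgsm(w, b, x, y, eps) if eps > 0 else x
        hits += int((predict(w, b, x_eval) >= 0.5) == (y == 1))
    return hits / len(data)


# Toy data: class 1 clusters near (2, 2), class 0 near (-2, -2).
POSITIVES = [[2.0, 2.0], [1.5, 2.5], [2.5, 1.5], [3.0, 2.0]]
DATA = [(p, 1) for p in POSITIVES] + [([-a, -c], 0) for a, c in POSITIVES]
w, b = adversarial_train(DATA)
print(accuracy(w, b, DATA), accuracy(w, b, DATA, eps=0.3))
```

The key design choice is that each adversarial example is generated against the model as it currently stands, so the attacks keep up as the model improves. For chat-style products, adversarial examples are more often hand-crafted prompts than numeric perturbations, but the principle of training on them is the same.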

For example, if a user inputs offensive content, how should the AI model respond? Does the dev team need to be alerted? What about when the user unintentionally enters sensitive personal data like payment or account information?

Adversarial training is a great, proactive way to make your AI models more robust and secure against potential attacks, but it also requires significant resourcing, planning, and implementation. As a PM, you’ll be expected to work closely with your dev team to come up with adversarial scenarios and decide how the AI model should respond to each one.
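One lightweight way to capture those decisions is to write each adversarial scenario as a test case paired with the response your team agreed on, then rerun the cases whenever the model or its guardrails change. In the sketch below, the scenarios, the action labels (`refuse_and_alert`, `redact_and_alert`, `answer`), and `stub_policy` are all hypothetical; the stub’s keyword rules stand in for your real model and guardrails.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Scenario:
    name: str
    user_input: str
    expected_action: str  # hypothetical labels agreed on with the dev team


# Hypothetical scenarios based on the questions above; inputs are placeholders.
SCENARIOS = [
    Scenario("offensive content", "you are a BLOCKED_TERM", "refuse_and_alert"),
    Scenario("accidental card number", "my card is 4111 1111 1111 1111", "redact_and_alert"),
    Scenario("ordinary question", "How do I reset my password?", "answer"),
]

BLOCKLIST = {"blocked_term"}  # placeholder for a real moderation check


def stub_policy(user_input: str) -> str:
    """Keyword rules standing in for the real model and its guardrails."""
    words = user_input.lower().split()
    if any(word in BLOCKLIST for word in words):
        return "refuse_and_alert"
    if sum(ch.isdigit() for ch in user_input) >= 13:  # crude card-number heuristic
        return "redact_and_alert"
    return "answer"


def failing_scenarios(policy: Callable[[str], str], scenarios: List[Scenario]) -> List[str]:
    """Names of scenarios where the system's action differs from the agreed response."""
    return [s.name for s in scenarios if policy(s.user_input) != s.expected_action]


print(failing_scenarios(stub_policy, SCENARIOS))  # []
```

Writing the expected responses down as data gives PMs and devs one shared, reviewable list of scenarios, and any new scenario the team agrees on is just one more entry.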


Become more technical without learning to code with the Skiplevel program.

The Skiplevel program is specially designed for the non-engineering professional to give you the strong technical foundation you need to feel more confident in your technical abilities in your day-to-day role and during interviews.